Spotting fake images with AI

Adobe and University of Maryland researchers use a deep-learning neural network to detect the subtle techniques now used to doctor images

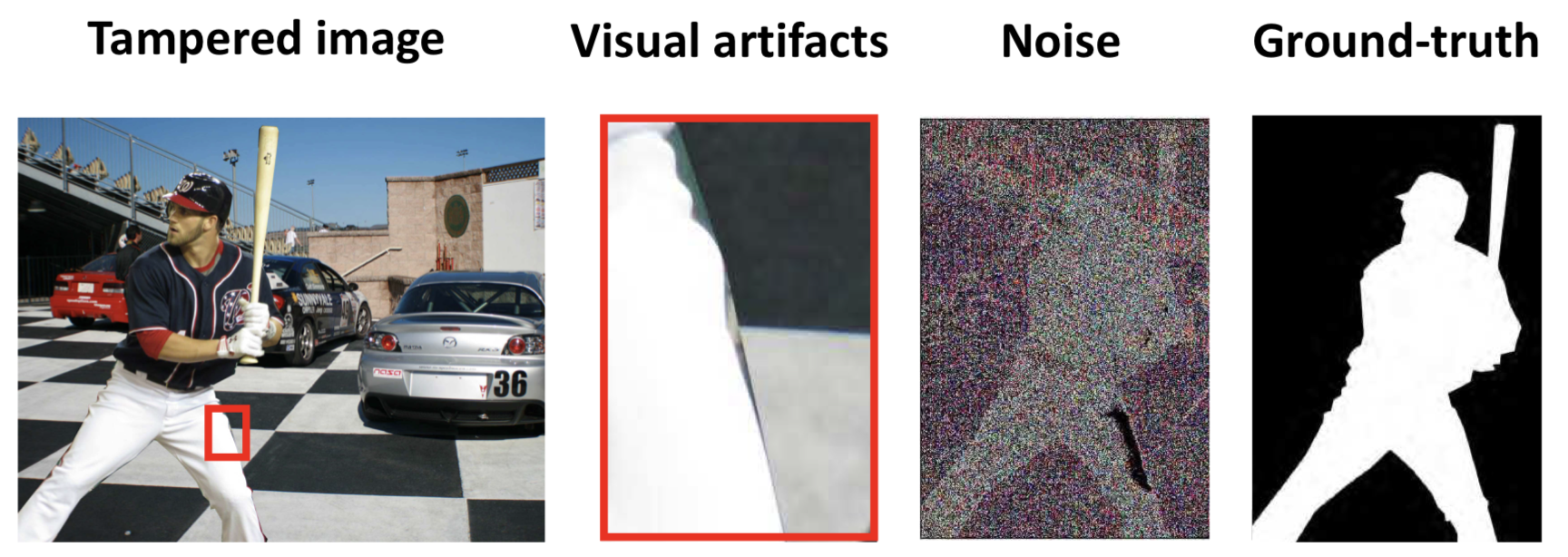

This tampered image (left) can be detected in two ways. The first is visual artifacts (red rectangle, showing the unnaturally high contrast along the baseball player’s edges) that set the tampered region apart from authentic regions (the parking-lot background). The second is inconsistencies in the noise pattern between the tampered region and the background (see the “Noise” image). The “ground-truth” image shows the outline of the added (fake) image used in the experiment. (credit: Adobe)

Thanks to user-friendly image editing software like Adobe Photoshop, it’s becoming increasingly difficult and time-consuming to spot some deceptive image manipulations.

Now, funded by DARPA, researchers at Adobe and the University of Maryland, College Park have turned to AI to detect the more subtle methods now used in doctoring images.

Forensic AI

What used to take an image-forensic expert several hours to do can now be done in seconds with AI, says Vlad Morariu, PhD, a senior research scientist at Adobe. “Using tens of thousands of examples of known, manipulated images, we successfully trained a deep learning neural network* to recognize image manipulation in each image,” he explains.

“We focused on three common tampering techniques: splicing, where parts of two different images are combined; copy-move, where objects in a photograph are moved or cloned from one place to another; and removal, where an object is removed from a photograph and the gap is filled in,” he notes.
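
The three techniques are easy to simulate, which shows how labeled training examples and a ground-truth mask (like the one in the figure) can be produced. The sketch below assumes grayscale images stored as 2-D NumPy float arrays. The function names are hypothetical, and the removal fill (the mean of the untouched pixels) is a deliberately naive stand-in for real fill-in tools. It is an illustration, not the researchers' data pipeline.

```python
import numpy as np

def region_mask(shape, top_left, size):
    """Boolean ground-truth mask marking the tampered rectangle."""
    (r, c), (h, w) = top_left, size
    mask = np.zeros(shape[:2], dtype=bool)
    mask[r:r + h, c:c + w] = True
    return mask

def splice(target, donor, top_left, size):
    """Splicing: paste a patch from a different image (donor) into target."""
    (r, c), (h, w) = top_left, size
    out = target.copy()
    out[r:r + h, c:c + w] = donor[:h, :w]  # donor patch taken from its top-left corner
    return out, region_mask(target.shape, top_left, size)

def copy_move(img, src, dst, size):
    """Copy-move: clone a patch of the same image to another location."""
    (sr, sc), (dr, dc), (h, w) = src, dst, size
    out = img.copy()
    out[dr:dr + h, dc:dc + w] = img[sr:sr + h, sc:sc + w]
    return out, region_mask(img.shape, dst, size)

def removal(img, top_left, size):
    """Removal: erase a region and fill it in (hypothetical naive fill:
    the mean of all untouched pixels)."""
    mask = region_mask(img.shape, top_left, size)
    out = img.copy()
    out[mask] = img[~mask].mean()
    return out, mask

# Two random "photographs" for the demo.
rng = np.random.default_rng(0)
photo_a = rng.random((8, 8))
photo_b = rng.random((8, 8))
spliced, gt = splice(photo_a, photo_b, (2, 2), (3, 3))
```

Each function returns the forged image together with its mask, so a detector can be trained to predict exactly where the manipulation is.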

The neural network looks for two things: changes to the red, green and blue color values of pixels; and inconsistencies in the random variations of color and brightness generated by a camera’s sensor or by later software manipulations, such as Gaussian smoothing.
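
The noise cue can be illustrated without any neural network. The sketch below assumes a flat synthetic scene with Gaussian "sensor" noise, smooths one region (a 3x3 box blur stands in for Gaussian smoothing), and then measures the high-pass residual tile by tile. The filter and tile size are illustrative choices, not the filters the researchers used; a learned detector picks up far subtler versions of the same mismatch.

```python
import numpy as np

def box_blur3(img):
    """3x3 mean filter with edge padding; a simple stand-in for Gaussian smoothing."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    # Average the nine shifted copies of the padded image.
    return sum(p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0

def noise_residual(img):
    """High-pass residual: image minus its local mean, leaving mostly noise."""
    return img - box_blur3(img)

def block_noise_map(img, block=8):
    """Standard deviation of the residual in each non-overlapping block x block tile."""
    r = noise_residual(img)
    hb, wb = img.shape[0] // block, img.shape[1] // block
    tiles = r[:hb * block, :wb * block].reshape(hb, block, wb, block)
    return tiles.std(axis=(1, 3))

# Synthetic scene: flat gray plus Gaussian "sensor" noise.
rng = np.random.default_rng(42)
scene = 0.5 + rng.normal(0.0, 0.05, size=(64, 64))

# Tamper: replace a 16x16 region with a smoothed copy of itself.
tampered = scene.copy()
tampered[16:32, 16:32] = box_blur3(scene)[16:32, 16:32]

noise_map = block_noise_map(tampered, block=8)  # 8x8 grid of per-tile noise levels
```

The four tiles covering the smoothed region should report a much lower residual level than the rest of the image, even though the pixel values themselves look unremarkable.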

* The researchers used a “two-stream Faster R-CNN” (a convolutional neural network designed for object detection) that they trained end-to-end to detect the tampered regions in a manipulated image. The two streams are RGB (the red, green, and blue color values of each pixel), which finds tampering artifacts such as strong contrast differences and unnatural boundaries around tampered regions; and noise, which finds inconsistencies in noise patterns between authentic and tampered regions. In the example above, note that the baseball player’s image is lighter; the algorithm can also detect more subtle differences and can even identify which tampering technique was used. The features from the two streams are then fused to further identify spatial co-occurrence of the two modalities (RGB and noise).
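
The footnote does not say how the two streams are fused. One standard way to capture co-occurrence of two feature sets is bilinear pooling, the outer product of the two feature vectors. The sketch below assumes that technique, applied to a single candidate region's pooled features, with made-up numbers; it is not the researchers' code.

```python
import numpy as np

def bilinear_fuse(rgb_feat, noise_feat):
    """Fuse one candidate region's RGB-stream and noise-stream feature vectors.

    The outer product holds every pairwise product rgb_feat[i] * noise_feat[j],
    so an entry is large only when an RGB cue and a noise cue fire together.
    Signed square root and L2 normalization are common post-processing steps
    for bilinear features.
    """
    outer = np.outer(rgb_feat, noise_feat).ravel()
    fused = np.sign(outer) * np.sqrt(np.abs(outer))
    norm = np.linalg.norm(fused)
    return fused / norm if norm > 0 else fused

# Made-up feature vectors for one region.
rgb = np.array([0.9, 0.1, 0.0])   # hypothetical "sharp boundary" responses
noise = np.array([0.2, 0.8])      # hypothetical "noise mismatch" responses
fused = bilinear_fuse(rgb, noise)  # 6-dimensional fused descriptor
```

In a real detector these vectors come from learned convolutional layers and are far larger; because the full outer product grows as the product of the two dimensions, compact approximations of bilinear pooling are often used instead.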
